Journey into Developing an Autonomous Drone System for GPS-Denied Flight

Introduction

In today's world, drones are revolutionizing numerous fields, from delivery services to intricate inspections of hard-to-reach areas. But what happens when a drone loses its GPS signal? At The Rubic, we set out on a mission to develop an autonomous drone capable of continuing its operation even in GPS-denied environments. This is the story of our journey, a journey filled with excitement and challenges, as we strive to create a fail-safe navigation system for drones.

Getting Acquainted with the Challenge

Our initial strategy was to familiarize ourselves with the environment and the resources available to us. I assembled a team to comprehensively understand the requirements for an autonomous drone, considering aspects such as vision, control, sensors, and computing. Our journey began with the testing of the cameras and sensors that are integral to our drone's autonomous capabilities.

Camera and Mapping Tests

We started with a simple yet essential task: integrating vision-based autonomy. That meant selecting cameras for different roles (some for navigation, some for object detection, and some for the drone's actual task, such as detecting damage, barcodes, or boxes) and working out the proper specification for the system as a whole.
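Working out a camera specification often comes down to simple pinhole geometry. The hypothetical helpers below estimate how many horizontal pixels fall on a target (say, a barcode) at a given distance, and the farthest distance at which a required pixel count is still met. The field of view, resolution, and target sizes are illustrative assumptions, not the values we used, and lens distortion (significant for fisheye lenses) is ignored.

```python
import math


def pixels_on_target(target_m: float, distance_m: float, hfov_deg: float, width_px: int) -> float:
    """Horizontal pixels covering a target of width target_m (ideal pinhole, no distortion)."""
    # Width of the scene visible at this distance.
    footprint_m = 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)
    return target_m * width_px / footprint_m


def max_detection_distance(target_m: float, required_px: float, hfov_deg: float, width_px: int) -> float:
    """Farthest distance at which the target still spans required_px pixels."""
    return target_m * width_px / (2.0 * math.tan(math.radians(hfov_deg) / 2.0) * required_px)


# Hypothetical example: a 5 cm barcode seen by a 1920-px-wide camera with a 90 degree FOV.
print(pixels_on_target(0.05, 1.0, 90.0, 1920))
print(max_detection_distance(0.05, 48, 90.0, 1920))
```

Narrowing the field of view puts more pixels on the target at the same distance, at the cost of seeing less of the scene, which is one reason different tasks ended up with different cameras.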

Next, we moved on to mapping. We started simply: another team member mounted the drone, with its cameras, on a metallic trolley and mapped the test area with a fisheye camera, so we could understand the mapping process and its challenges before flying. There were many challenges right from the start, but we eventually mapped the test area successfully, a first indication that the system could cope with real-world conditions.

Once we had solved these initial challenges, we moved on to more advanced mapping and autonomy scenarios.

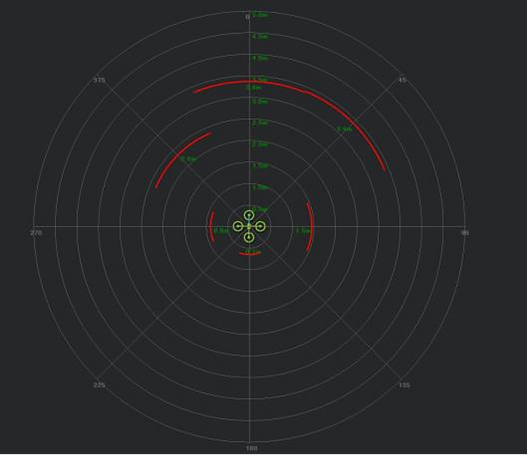

Proximity Sensor Testing

The initial sensor package included a Cygbot 2D Lidar and several TFMini Plus Lidars. Testing these sensors was critical to ensure they could provide accurate readings for obstacle avoidance during flight.
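The post does not describe the test harness, but as one hedged illustration of what "accurate readings" involves, the sketch below decodes a TFMini Plus serial frame. It assumes the sensor's standard 9-byte output format (two 0x59 header bytes, little-endian distance in cm, signal strength, chip temperature, then a checksum equal to the low byte of the sum of the first eight bytes) and treats a strength below 100 or equal to 65535 as unreliable. That is our reading of Benewake's datasheet; verify it against your firmware. The function names and the obstacle threshold are hypothetical.

```python
from typing import NamedTuple, Optional

FRAME_HEADER = 0x59
FRAME_LEN = 9


class TfminiReading(NamedTuple):
    distance_cm: int
    strength: int
    temperature_c: float


def parse_tfmini_frame(frame: bytes) -> Optional[TfminiReading]:
    """Decode one 9-byte frame; return None if the header or checksum is wrong."""
    if len(frame) != FRAME_LEN or frame[0] != FRAME_HEADER or frame[1] != FRAME_HEADER:
        return None
    # Checksum: low 8 bits of the sum of bytes 0..7 (assumed format).
    if sum(frame[:8]) & 0xFF != frame[8]:
        return None
    distance = frame[2] | (frame[3] << 8)
    strength = frame[4] | (frame[5] << 8)
    temp_raw = frame[6] | (frame[7] << 8)
    return TfminiReading(distance, strength, temp_raw / 8.0 - 256.0)


def obstacle_within(reading: Optional[TfminiReading], threshold_cm: int,
                    min_strength: int = 100) -> Optional[bool]:
    """True/False for a trustworthy reading, None if it should be ignored."""
    if reading is None or reading.strength < min_strength or reading.strength == 0xFFFF:
        return None
    return reading.distance_cm <= threshold_cm
```

Returning None rather than False for weak or corrupted readings keeps "no obstacle" and "don't know" distinct, which matters when the result feeds obstacle avoidance.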

Chicken or Egg?

There's always a "chicken or egg" situation in drone development. Initially, you select all the systems you want on the drone, but then, when you start looking into their power usage, you quickly realize the need for a larger battery and, therefore, a heavier drone. Consequently, you need a larger drone, which may push you out of the confidence zone for size and wind resistance or exceed the specifications for the application.
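The loop described above can be made concrete with a rough sizing iteration. This is an illustration under stated assumptions, not our design tool: hover power comes from ideal momentum theory, P = (mg)^1.5 / sqrt(2ρA), divided by one lumped efficiency; battery mass scales with the energy needed via an assumed specific energy; and every default number is hypothetical. If the battery's own weight raises the power demand faster than it adds endurance, the iteration never settles, which is the "chicken or egg" spiral in numerical form.

```python
import math
from typing import Optional

G = 9.81      # gravitational acceleration, m/s^2
RHO = 1.225   # sea-level air density, kg/m^3


def hover_power_w(mass_kg: float, disk_area_m2: float, efficiency: float) -> float:
    """Electrical hover power: ideal momentum-theory power divided by a lumped efficiency."""
    thrust_n = mass_kg * G
    ideal_w = thrust_n ** 1.5 / math.sqrt(2.0 * RHO * disk_area_m2)
    return ideal_w / efficiency


def size_takeoff_mass(
    base_mass_kg: float,          # airframe + payload + sensors, without battery
    avionics_w: float,            # constant draw of compute, cameras, lidars
    hover_s: float,               # required hover endurance
    disk_area_m2: float = 0.2,    # total rotor disk area (hypothetical)
    efficiency: float = 0.5,      # figure of merit x motor/ESC efficiency (hypothetical)
    specific_energy_j_per_kg: float = 540_000.0,  # ~150 Wh/kg pack (hypothetical)
    max_mass_kg: float = 50.0,
    tol_kg: float = 1e-6,
    max_iter: int = 200,
) -> Optional[float]:
    """Fixed-point iteration: heavier drone -> more power -> bigger battery -> heavier drone.

    Returns the converged take-off mass, or None if the design never closes.
    """
    mass = base_mass_kg
    for _ in range(max_iter):
        power_w = hover_power_w(mass, disk_area_m2, efficiency) + avionics_w
        battery_kg = power_w * hover_s / specific_energy_j_per_kg
        new_mass = base_mass_kg + battery_kg
        if new_mass > max_mass_kg:
            return None  # mass spiral: the battery cannot carry itself
        if abs(new_mass - mass) < tol_kg:
            return new_mass
        mass = new_mass
    return None
```

With these placeholder numbers, a 2 kg base with 30 W of avionics closes for a 15-minute hover, while asking for a full hour makes the iteration run away. Adding sensors or compute raises `avionics_w` and, through the battery, the whole take-off mass.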

First Flights and Manual Inspections

After the foundational tests were completed, we progressed to manual flight and inspection tests. The goal was to fine-tune the drone's flight parameters and ensure that it could reliably perform manual inspections.

Setting Up and Testing

The test procedures included setting up the test area, installing markers, and scaling the map with these markers. We then "walked" the drone to confirm its positioning. Our first flight test involved basic maneuvers like take-off, static positioning, and XY stability. Each test provided critical data to adjust the drone's settings for optimal performance.
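Scaling the map with markers matters because a map built from a single camera generally has an arbitrary scale. One simple way to fix it, sketched below under the assumption that the true (for example, tape-measured) marker positions are known, is to choose the single scale factor s that best matches true marker-to-marker distances to the distances in the map, in the least-squares sense: s = Σ d_true·d_map / Σ d_map². The function names are hypothetical, and this is not necessarily how our pipeline did it.

```python
import math
from itertools import combinations
from typing import List, Sequence, Tuple

Point = Sequence[float]


def estimate_map_scale(map_pts: List[Point], true_pts: List[Point]) -> float:
    """Least-squares scale s minimising sum((d_true - s * d_map)^2) over all marker pairs."""
    if len(map_pts) != len(true_pts):
        raise ValueError("each map marker needs a matching true position")
    num = den = 0.0
    for i, j in combinations(range(len(map_pts)), 2):
        d_map = math.dist(map_pts[i], map_pts[j])
        d_true = math.dist(true_pts[i], true_pts[j])
        num += d_true * d_map
        den += d_map * d_map
    if den == 0.0:
        raise ValueError("need at least two distinct markers")
    return num / den


def apply_scale(points: List[Point], s: float) -> List[Tuple[float, ...]]:
    """Rescale map points into metric units."""
    return [tuple(s * c for c in p) for p in points]
```

Only pairwise distances are compared, so the map and the measured positions do not need to share an origin or orientation. Using more than two markers averages out measurement error.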

Flight Sequence Simulation

We conducted a series of flight sequence simulations to test the drone's stability and responsiveness. This involved flying the drone to various points, performing lateral flights, and monitoring battery levels and motor temperatures.
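Monitoring battery levels and motor temperatures during these sequences can be partly automated. The sketch below scans logged samples and flags any that cross limits; the sample structure, the 4-cell pack, and the 3.5 V per cell and 80 °C thresholds are hypothetical placeholders, not the limits we flew with.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class TelemetrySample:
    t_s: float                 # time since take-off, seconds
    pack_voltage_v: float      # total battery voltage
    motor_temps_c: List[float] # one reading per motor


def check_telemetry(samples: Iterable[TelemetrySample], cells: int = 4,
                    min_cell_v: float = 3.5, max_motor_c: float = 80.0) -> List[str]:
    """Return a human-readable warning for every limit violation found."""
    warnings: List[str] = []
    for s in samples:
        # Average per-cell voltage; a real pack monitor would read cells individually.
        cell_v = s.pack_voltage_v / cells
        if cell_v < min_cell_v:
            warnings.append(f"t={s.t_s:.1f}s: low cell voltage {cell_v:.2f} V")
        for i, temp in enumerate(s.motor_temps_c):
            if temp > max_motor_c:
                warnings.append(f"t={s.t_s:.1f}s: motor {i} at {temp:.1f} C")
    return warnings
```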

Failure Is a Big Part of Learning

Failure is painful on the surface, but there is a lot to learn underneath.

Implementing the Fail-Over System

The IR-Seeker Method

One of the most exciting parts of our journey was developing the fail-over system using an IR-seeker. This system would allow the drone to navigate and land safely even if it lost its main localization system.
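The post does not detail the control law, so the following is only a hedged sketch of how a downward-looking IR seeker could guide a landing. It converts the seeker's angular offset to an IR beacon into a ground-plane offset (assuming flat ground and a known altitude), commands a clamped proportional velocity toward the beacon, and descends only once roughly centered. The function, its gains, the sign convention, and the hold-in-place behavior when the beacon is lost are all assumptions for illustration.

```python
import math
from typing import Optional, Tuple


def ir_landing_command(
    bearing: Optional[Tuple[float, float]],
    altitude_m: float,
    gain: float = 0.8,               # proportional gain, 1/s (hypothetical)
    max_speed: float = 0.5,          # lateral speed limit, m/s (hypothetical)
    center_tolerance_m: float = 0.1, # "centered" radius (hypothetical)
    descent_rate: float = 0.3,       # descent speed once centered, m/s (hypothetical)
) -> Tuple[float, float, float]:
    """Return (vx, vy, descent) in m/s.

    bearing: (angle_x, angle_y) in radians from the optical axis to the beacon,
    or None if the beacon is not in view. Positive angles are assumed to mean the
    beacon lies toward +x / +y in the body frame.
    """
    if bearing is None:
        # Beacon lost: hold position and altitude rather than guess.
        return 0.0, 0.0, 0.0
    # Project the angular offset onto the ground plane (flat-ground assumption).
    dx = altitude_m * math.tan(bearing[0])
    dy = altitude_m * math.tan(bearing[1])
    # Proportional velocity toward the beacon, clamped to a safe speed.
    vx = max(-max_speed, min(max_speed, gain * dx))
    vy = max(-max_speed, min(max_speed, gain * dy))
    # Only descend once roughly centered over the beacon.
    centered = math.hypot(dx, dy) <= center_tolerance_m
    return vx, vy, descent_rate if centered else 0.0
```

Gating the descent on horizontal error keeps the drone from committing to a landing while it is still far from the beacon.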

Overcoming Challenges

Throughout our development journey, we encountered and overcame numerous challenges. From ensuring accurate sensor readings to handling power management issues, each obstacle taught us valuable lessons.

Understanding Autonomous Flight and Vision Systems

Autonomous flight is the capability of a drone to fly without human intervention, using a combination of sensors, algorithms, and control systems to navigate and complete its mission. In GPS-denied environments, this becomes particularly challenging, requiring alternative methods for localization and navigation.

Vision-Based Navigation

Vision-based navigation is one of the most promising approaches to achieving autonomy in GPS-denied environments. This involves using cameras and computer vision algorithms to interpret the environment and make real-time decisions about the drone's position and trajectory.

Key Components of Vision-Based Navigation

- Cameras and Sensors: High-resolution cameras capture detailed images of the surroundings. These can include monocular cameras, stereo cameras, or depth sensors.
- Feature Detection and Matching: Algorithms identify and match key features in consecutive images to estimate the drone's movement.
- Visual Odometry: This process estimates the drone's motion by analyzing changes in camera images over time. It also estimates the drone's velocity and position relative to its starting point.
- SLAM (Simultaneous Localization and Mapping): SLAM algorithms build a map of the environment while simultaneously tracking the drone's location within that map. This is crucial for navigating unknown or dynamic environments.
- Obstacle Detection and Avoidance: Vision systems help detect obstacles in the drone's path and adjust its trajectory to avoid collisions. Techniques like optical flow and depth estimation are used to perceive the 3D structure of the environment.
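To make visual odometry concrete, here is a minimal sketch for one common configuration, a downward-facing camera over flat ground. Apparent image motion (optical flow) is scaled by altitude over focal length to get a metric displacement, and successive displacements are chained into a pose relative to the starting point. It assumes rotation has already been removed from the flow (for example with gyro data) and that image axes line up with body axes; the names are hypothetical, and a full visual odometry or SLAM pipeline does considerably more (feature tracking, outlier rejection, drift correction).

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Pose2D:
    x: float = 0.0    # metres from the starting point
    y: float = 0.0
    yaw: float = 0.0  # radians


def wrap_angle(a: float) -> float:
    """Wrap an angle to (-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def flow_to_body_displacement(flow_px: Tuple[float, float], altitude_m: float,
                              focal_px: float) -> Tuple[float, float]:
    """Pinhole model: ground features appear to move opposite to the camera, hence the sign flip."""
    scale = altitude_m / focal_px
    return -flow_px[0] * scale, -flow_px[1] * scale


def compose(pose: Pose2D, dx_body: float, dy_body: float, dyaw: float) -> Pose2D:
    """Apply a body-frame motion increment to a pose expressed in the start frame."""
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    return Pose2D(
        pose.x + c * dx_body - s * dy_body,
        pose.y + s * dx_body + c * dy_body,
        wrap_angle(pose.yaw + dyaw),
    )


# Usage: fly a 1 m square (move forward, turn 90 degrees, four times).
pose = Pose2D()
for _ in range(4):
    pose = compose(pose, 1.0, 0.0, math.pi / 2)
print(pose)
```

Because each increment is composed onto the previous pose, small errors accumulate over time; that drift is one reason SLAM adds map-based corrections on top of odometry.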

Benefits of Vision-Based Navigation

- Independence from GPS: Vision-based systems do not rely on external signals, making them ideal for indoor or GPS-denied environments.
- Rich Environmental Understanding: Cameras provide a wealth of environmental information, enabling complex tasks like object recognition and interaction.
- Cost-Effectiveness: With advancements in computer vision, high-performance cameras and processing units have become more affordable, making vision-based navigation a viable option for many applications.

Challenges and Future Directions

While vision-based navigation offers many benefits, it also presents challenges such as:

- Processing Power: Real-time image processing requires significant computational resources.
- Lighting Conditions: Performance can be affected by varying lighting conditions, shadows, and reflections.
- Dynamic Environments: Rapid changes in the environment, such as moving objects, can complicate navigation.

Future developments aim to address these challenges by improving algorithms, integrating additional sensors (like LiDAR), and leveraging machine learning to enhance the drone's perception and decision-making capabilities.
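As one hedged example of coping with difficult lighting, a vision pipeline can refuse frames that are too dark, too bright, too flat (low contrast, hence few trackable features), or heavily clipped before they reach feature tracking. The function name and all thresholds below are hypothetical and would need tuning per camera.

```python
import statistics
from typing import Sequence


def frame_usable(
    gray: Sequence[Sequence[int]],  # 8-bit grayscale frame as rows of pixel values
    min_mean: float = 30.0,         # reject very dark frames (hypothetical)
    max_mean: float = 225.0,        # reject washed-out frames (hypothetical)
    min_std: float = 10.0,          # reject low-contrast frames (hypothetical)
    max_clipped: float = 0.2,       # max fraction of near-black/near-white pixels (hypothetical)
) -> bool:
    """Cheap exposure and contrast gate applied before feature detection."""
    pixels = [p for row in gray for p in row]
    if not pixels:
        return False
    mean = statistics.fmean(pixels)
    std = statistics.pstdev(pixels)
    clipped = sum(1 for p in pixels if p <= 5 or p >= 250) / len(pixels)
    return min_mean <= mean <= max_mean and std >= min_std and clipped <= max_clipped
```

Skipping a bad frame is usually safer than feeding it to odometry, provided another source (such as an IMU or a lidar) can bridge the gap.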

Conclusion and Future Steps

Our journey in developing an autonomous drone system for GPS-denied flight was both challenging and rewarding. Through rigorous testing and continuous improvements, we developed a robust fail-over system that ensures the drone can continue its mission even when GPS signals are lost.

There is much more to the full story; this post covers only the highlights. I want to thank my close friends Patrick Poirier, Auguste Lalande, and Tim Johnson, who were with me throughout this development journey.

As we move forward, we plan to refine our system further and explore additional functionalities to enhance the drone's autonomy and reliability. Stay tuned for more updates on our exciting journey!